BENGALURU: Many startups focused on AI development, influenced by Chinese AI startup DeepSeek, are initiating projects and allocating resources towards creating indigenous models for India.

“The focus is not just on building LLMs (large language models) but on developing advanced models for artificial general intelligence (AGI) eventually. We can begin with 10 companies, including ourselves, TCS and Infosys, each contributing $20 million. If govt matches multiples of this funding, it could create a $600-$800 million fund, enough for us to build a frontier model,” Fractal Analytics founder and CEO Srikanth Velamakanni told TOI.

He said such an initiative could also attract the finest talent from across the world, since many AI researchers globally are Indian. Fractal Analytics has built four small language models (SLMs): Vaidya, Kalaido, Marshall, and Ramanujan. Velamakanni added that the company currently plans to open source Ramanujan, a complex reasoning model built specifically for higher-order tasks such as Olympiad-level mathematics and chess, which supposedly beats OpenAI’s o1.

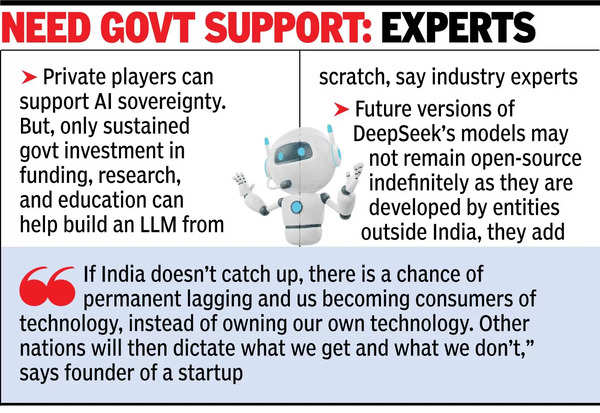

Industry experts told TOI that while private players can support AI sovereignty, only sustained govt investment in funding, research, and education can help build an LLM from scratch. They added that future versions of DeepSeek’s models may not remain open-source indefinitely, especially as they are developed by entities outside India.

Building LLMs from scratch requires major investments in hardware, data centres, talent, and innovation. While DeepSeek’s R1 model was built for under $6 million, that figure excludes the cost of hardware, years of capital investment, and the skilled workforce the company deployed over the years.

“If India doesn’t catch up, there is a chance of permanent lagging and us becoming consumers of technology, instead of owning our own technology. Other nations will then dictate what we get and what we don’t. It’s just how important it was for India to build its own nuclear technology,” Paras Chopra, founder of Lossfunk, told TOI.

Chopra, who recently sold his previous company for $200 million, founded Lossfunk as a tinkering project to build on AI models as part of a larger residency programme in the space.

Prayank Swaroop, partner at Accel, said India still lacks strong domestic AI skill sets and capabilities. “Ideally, going forward, our strides here should be less reactive and more naturally aligned. Today it is AI, tomorrow there could be game-changing disruptions in quantum computing. We need to be ready for such a future,” Swaroop added.

Over the past year, India has split into two factions: one that wants to build indigenous LLMs from scratch and another that wants to build SLMs, which have fewer parameters and focus on specific applications. Homegrown AI startup Sarvam AI’s model has 2 billion parameters, with an emphasis on Indian languages. R1 has 671 billion parameters and does not focus on any specific use case.